In a previous post, I described how to run AWS DynamoDB locally using the AWS-supplied Docker image. I’ve recently been doing some work where I’m benchmarking code that works against AWS services. For example, I’m working to optimise speed and reduce allocations when downloading and parsing a file from AWS S3. I was curious if I could find a way to take networking out of the mix when timing and benchmarking this code.

In this post, I will demonstrate how to run the LocalStack Docker image locally to support local versions of additional AWS services. Specifically, I’ll show you how to run S3 using the LocalStack image and then cover how to set up your AWS SDK C# client to connect to the local S3 service from a .NET Core console application.

Running the LocalStack Docker Image

I decided to add LocalStack to a Docker Compose file which I use when developing applications. My Docker Compose file spins up various services such as Elasticsearch, PostgreSQL and Redis. I chose to add a new service to the end of my compose file. Here’s a reduced sample…
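A minimal sketch of such a service, reconstructed from the walkthrough that follows rather than copied from the original file. The container name, region value and named volume are placeholders of my own, and the environment variables reflect the LocalStack release described in this post; later releases may configure ports and persistence differently, so check the LocalStack documentation for the version you run.

```yaml
version: "3"
services:
  localstack:
    # Latest LocalStack image from Docker Hub
    image: localstack/localstack:latest
    # Placeholder name, used to identify the running container
    container_name: localstack
    environment:
      # Run only S3, listening on port 5002 inside the container
      - SERVICES=s3:5002
      # Placeholder: set this to the region you normally use
      - DEFAULT_REGION=us-east-1
      # Enables persistence; data is written under this directory
      - DATA_DIR=/tmp/localstack/data
    ports:
      # S3: host port 5002 -> container port 5002
      - "5002:5002"
      # Web UI: host port 9999 -> container port 8080
      - "9999:8080"
    volumes:
      # Keep persisted data outside the container's lifetime
      - localstack-data:/tmp/localstack

volumes:
  localstack-data:
```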

Let’s walk through the lines of the service:

The first line defines the image to use for the service. Here I’m using the latest LocalStack image from Docker Hub.

Next, I set a container name so that I can easily identify the running container later.

Next, I define some environment variables to configure the LocalStack service. The LocalStack GitHub repository explains all of the options available. Here I use the SERVICES variable to define the AWS services that will run inside the container. I’ve chosen to run only S3 for now and to have it listen on port 5002 within the container.

I set the DEFAULT_REGION to match the region I use in production most of the time.

Finally, I set the DATA_DIR to enable persistent data. Without this, no data would be retained after restarting the container. By default persistence is disabled. Persistence supports storage of data from the Kinesis, DynamoDB, Elasticsearch and S3 services.

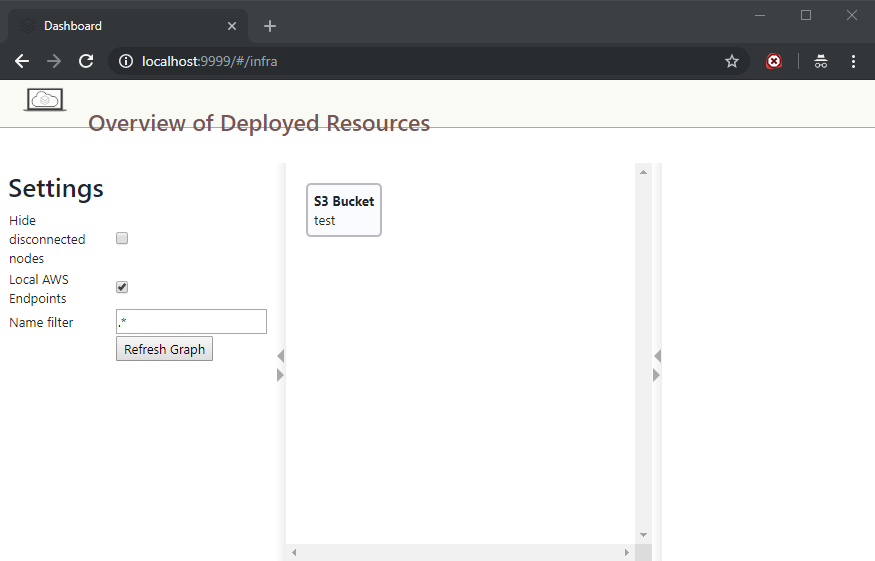

Next, I expose two ports. The first maps port 5002 in the container, which is where I’m running AWS S3, to port 5002 on my host. This will allow us to connect later. The second mapping publishes the basic web UI, which runs on port 8080 inside the container, on port 9999 on my host machine. This will allow us to view any resources created within LocalStack.

Finally, I include a volume that mounts the persisted data inside the container onto my host. This ensures that my data can be used even after the container has been removed.

The UI on my system (after creating an S3 bucket) looks like this…

Working with LocalStack from a .NET Core Application

In order to test the LocalStack S3 service, I created a basic .NET Core based console application. You’ll need to bring in the relevant NuGet package for the service you wish to work with. In my case, I added the following package reference to my project file.

<PackageReference Include="AWSSDK.S3" Version="3.3.31.13" />

Next, we need to create a client instance to work with. The simplest way to do this for testing is to new one up directly…

In order to get the client to work with the local service, we can provide the service URL it should use. In this case, it’s running under localhost and port 5002 which we specified in the docker-compose ports section.

The ForcePathStyle setting also needs to be set. By default, the client uses virtual-hosted-style addressing, where the bucket name is prepended to the host name in order to access the bucket. Since this would change the localhost name we’re using, forcing path style, where the bucket name is placed in the URL path instead, gets everything working properly.
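The configuration described above can be sketched roughly as follows. The `LocalS3ClientFactory` class name is hypothetical, and passing placeholder credentials is my own assumption: LocalStack does not check them, but supplying them explicitly stops the SDK from searching the machine for a real credential profile.

```csharp
using Amazon.Runtime;
using Amazon.S3;

// Hypothetical helper for illustration; the class and method names are not from the AWS SDK.
public static class LocalS3ClientFactory
{
    public static IAmazonS3 Create()
    {
        var config = new AmazonS3Config
        {
            // Host port published for S3 in the docker-compose ports section.
            ServiceURL = "http://localhost:5002",

            // Address buckets as http://localhost:5002/{bucket}
            // instead of http://{bucket}.localhost:5002.
            ForcePathStyle = true
        };

        // Assumption: placeholder values, since the local service does not validate credentials.
        var credentials = new BasicAWSCredentials("test", "test");

        return new AmazonS3Client(credentials, config);
    }
}
```

Keeping the local settings in one place like this makes it easy to swap in a normally configured client when running against real AWS.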

That’s basically everything. Once we have a client, we can make the usual AWS calls to perform supported actions against those services. There is no authentication needed to make calls to the services.

In this example, we create a new bucket and transfer a file to it.
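A minimal sketch of those two steps, assuming an `IAmazonS3` client already configured for LocalStack. The `LocalS3Example` class, its method name and its parameters are hypothetical, and using `TransferUtility` for the upload is one reasonable choice rather than the only way to do it; `PutObjectAsync` would also work.

```csharp
using System.Threading.Tasks;
using Amazon.S3;
using Amazon.S3.Model;
using Amazon.S3.Transfer;

// Hypothetical example class; names are illustrative only.
public static class LocalS3Example
{
    public static async Task CreateBucketAndUploadAsync(
        IAmazonS3 client, string bucketName, string filePath)
    {
        // Create the bucket in the local S3 service.
        // This sketch does not handle the case where the bucket already exists.
        await client.PutBucketAsync(new PutBucketRequest { BucketName = bucketName });

        // TransferUtility wraps the upload; with this overload the object key
        // is taken from the file name.
        using (var transferUtility = new TransferUtility(client))
        {
            await transferUtility.UploadAsync(filePath, bucketName);
        }
    }
}
```

After this runs, the new bucket and the uploaded object should be visible in the LocalStack web UI.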

Summary

LocalStack seems like a great solution to a few problems facing cloud development. For example, I can now develop offline whilst travelling without any problems. I can perform some load testing of services without incurring costs and I can benchmark and test my code more accurately. The pro edition also looks to support CI integration scenarios if you want to perform any repeatable integration tests as part of your CI process.
