Last week I read about a new Docker ECS Integration experience, and it piqued my interest. I immediately put it onto my list of things to try out and experiment with. After spending an hour or so with the tooling over the weekend, my early impression is that this CLI feature might be quite useful. In this post, I will briefly explain what the integration looks like today and how to get started with it.

Another useful resource is the recording of the recent AWS Cloud Container Conference. At around 2 hours 18 minutes, it includes a session and demo of the integration by Chad Metcalf from Docker and Carmen Puccio from AWS.

Introducing the Beta Docker ECS Integration

The goal of the tooling is to make it easy to deploy the containers we run locally into AWS, running inside ECS. A potential use case of this integration is to support rapid experimentation and development of cloud-native, containerised service architectures. Today, we can rapidly prototype architectures with Docker locally.

I often use a docker-compose file to spin up local versions of dependencies such as Redis when running in development. At times, I may also spin up local versions of internal services using Docker. This is extremely convenient in the local scenario.

When it comes time to run our containers in the cloud, things become a little more involved. We use a custom deployment flow defined using Octopus Deploy which creates necessary services and service dependencies with the help of CloudFormation files. Running containers in ECS requires not only prerequisites such as a configured ECS cluster, but also a Task Definition. This file is similar to the docker-compose file, in that we define the details of the container(s) we wish to run. Despite being similar in their effect, these files differ in structure. Our tooling and developer teams therefore need to understand how to work with both. Wouldn’t it be nice if we could prototype our services in Amazon ECS more quickly?

The teams at AWS and Docker have partnered on a new integration experience. With the ECS integration for Docker, we can quickly deploy services directly into AWS ECS (Elastic Container Service) using the Docker CLI. ECS services are started to run your docker-compose workloads using the AWS Fargate serverless compute engine. Dependencies such as an ECS Cluster and VPC are also managed for you.

At the moment, this new tooling is implemented as a Docker plugin. This supports rapid iteration of the tooling experience as it evolves. When the tooling goes GA, the expectation is that it will be more embedded into the existing Docker commands that we use today, such as docker run and docker-compose.

Note: This is a beta product, and already there have been some significant changes between beta one and beta two. Expect this to continue to evolve and for features to be added/removed as it develops. I’m using Docker Desktop Edge (2.3.3.1) in this post, along with the beta 2 version of the plugin.

Getting Started with the Docker ECS Integration Beta

The resources and documentation are entirely new, and the tool is evolving, so I ran into a few issues and missing details when I started testing the CLI integration. Through trial and error, I was able to solve these and get a sample application running. I’ll describe the steps I took, which are current at the time of publishing this post.

Installing Docker Desktop for Windows (Edge)

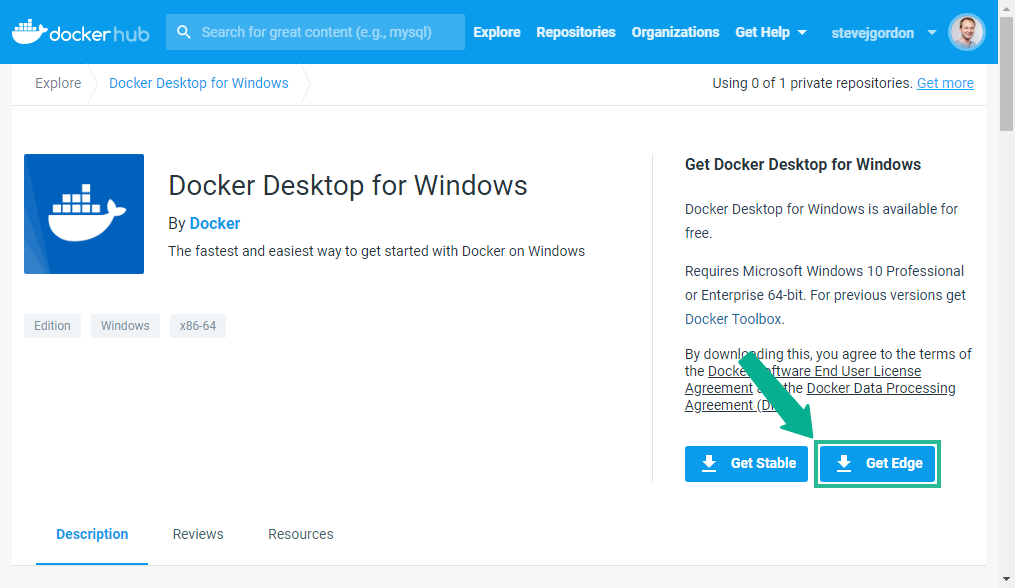

The first pre-requisite is to ensure that you have Docker Desktop 2.3.3.0 or later installed. At the time of writing, this is available as an “Edge” version of the product. We need this version to access the newer features required for the tooling to work. The Edge version can be downloaded from Docker Hub.

After installing Docker Desktop Edge, I wanted to test that the new CLI command was working. I tried running the docker ecs version command, as suggested in the ECS integration documentation.

C:\>docker ecs version

docker: 'ecs' is not a docker command.

See 'docker --help'

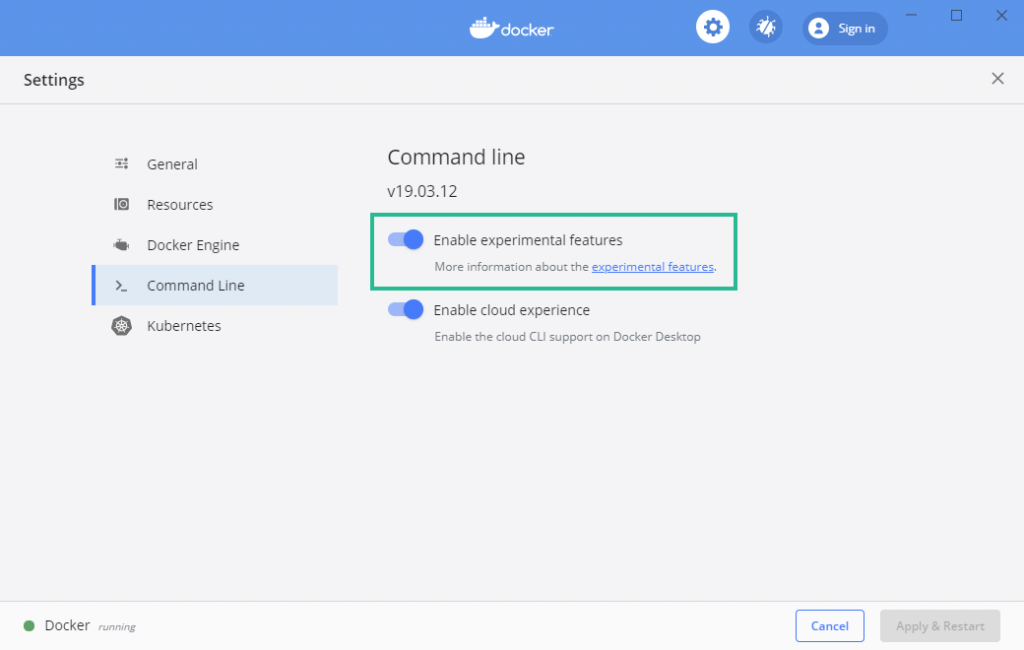

Somewhat curiously, the new command was not working despite the installation. The first thing I tried was a restart of my PC, but this did not solve the problem either. I soon realised that I needed to opt in to the experimental features by configuring the command line settings for Docker Desktop.

After this change, the version command was working as expected…

C:\>docker ecs version

Docker ECS plugin v1.0.0-beta.1 (12a47cb)

Running Docker ECS Setup

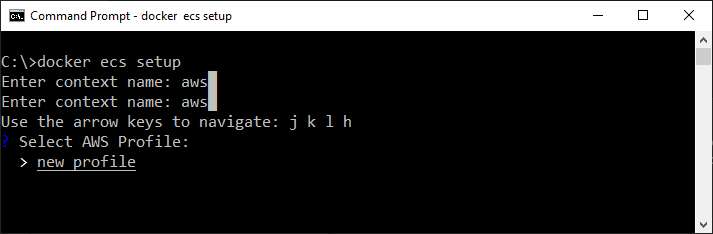

The next step is to set up the integration by running docker ecs setup. This walks you through providing the information required by the tool to create a Docker context. A context provides Docker with details about where and how commands should execute. The default context is the local context which runs Docker commands locally to your development machine.

We can create a context to support working with Docker against AWS by using the setup command. The command will ask you a series of questions to complete the creation of a context.

C:\>docker ecs setup

Enter context name: aws

v new profile

Enter profile name: demo

Enter region: eu-west-2

Enter credentials: y

Enter AWS Access Key ID: MADEUP123KSVUIN62DOY1

Enter AWS Secret Access Key: ****************************************

Enter Context Name:

This is a name for the Docker context. I used “aws”.

Enter Profile Name:

The setup process will check for existing named profiles in the .aws/config file under the current user profile. You may choose to select and use an existing profile, or create a new one.

I let the tool create a new profile, although I noticed that the profile is not added to the config file automatically.

Enter Cluster Name:

In my early attempts, I provided a cluster name, assuming this was the name to be used when creating a cluster. It turns out that it is the name of an existing cluster, so my original attempts failed to deploy because the cluster I had specified did not exist. I learned by re-watching the demo that I should leave this blank to create a new cluster automatically.

Note: This experience is improved in beta 2, which no longer asks for this information during context setup. Instead, an additional property, x-aws-cluster, can be added to the docker-compose file to specify an existing cluster. When it is not present, a new cluster is created.
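To make the beta 2 behaviour concrete, here is a minimal sketch that writes a compose file pointing at an existing cluster. The cluster name my-existing-cluster and the file name are hypothetical, and placing x-aws-cluster at the top level of the file is my assumption about where the plugin reads it, so check the plugin documentation for your version. The service and image are the ones I use later in this post.

```shell
#!/bin/sh
# Sketch: write a compose file that targets an existing ECS cluster.
# "my-existing-cluster" is a hypothetical name, and the top-level position
# of x-aws-cluster is an assumption. Remove that line to get a new cluster.
cat > docker-compose.cluster-example.yml <<'EOF'
version: '3.8'
x-aws-cluster: my-existing-cluster
services:
  worker-service:
    image: 123456789012.dkr.ecr.eu-west-2.amazonaws.com/ecr-demo:latest
EOF
```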

Enter Credentials:

I then provided the AWS Access Key ID and AWS Secret Access Key for an IAM user with sufficient privileges in AWS. The IAM user (or role) requires several permissions to support creating and managing a range of services in AWS.

The requirements document in the GitHub repository lists the necessary permissions as:

- ec2:DescribeSubnets

- ec2:DescribeVpcs

- iam:CreateServiceLinkedRole

- iam:AttachRolePolicy

- cloudformation:*

- ecs:*

- logs:*

- servicediscovery:*

- elasticloadbalancing:*
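As a rough sketch, the permissions above could be captured in an IAM policy document like the one this script writes. The file name, the policy name in the commented command and the broad "Resource": "*" are my own assumptions for a throwaway demo account, not something the requirements document prescribes. You would likely want to scope this down for a real account.

```shell
#!/bin/sh
# Sketch: write the permissions listed above into an IAM policy document.
# The file name and the wildcard resource are assumptions for a demo account.
cat > docker-ecs-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeSubnets",
        "ec2:DescribeVpcs",
        "iam:CreateServiceLinkedRole",
        "iam:AttachRolePolicy",
        "cloudformation:*",
        "ecs:*",
        "logs:*",
        "servicediscovery:*",
        "elasticloadbalancing:*"
      ],
      "Resource": "*"
    }
  ]
}
EOF

# To register it with the AWS CLI (needs valid credentials; the policy
# name "docker-ecs-beta" is hypothetical):
# aws iam create-policy --policy-name docker-ecs-beta \
#   --policy-document file://docker-ecs-policy.json
```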

A new entry for the credentials, matching my profile name, is created in the .aws/credentials file. As mentioned above, the corresponding profile does not seem to be added to the config file, which may be a bug.
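If you want the matching profile in the config file as well, something like the following sketch could add it by hand. The ensure_profile helper is hypothetical and is not part of the Docker tooling. It appends a [profile name] section with a region only when one is not already there. The example call writes to a scratch file rather than the real config, so nothing in your home directory is touched.

```shell
#!/bin/sh
# Hypothetical helper: add a named profile to an AWS config file if missing.
# Usage: ensure_profile <config-file> <profile-name> <region>
ensure_profile() {
  file="$1"; name="$2"; region="$3"
  mkdir -p "$(dirname "$file")"
  touch "$file"
  # -F treats the section header as a fixed string, not a regex.
  if ! grep -qF "[profile $name]" "$file"; then
    printf '\n[profile %s]\nregion = %s\n' "$name" "$region" >> "$file"
  fi
}

# Example against a scratch file; point it at ~/.aws/config for real use.
ensure_profile ./aws-config-example demo eu-west-2
```

Running it a second time with the same arguments leaves the file unchanged.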

Creating the docker-compose.yml File

In my last post, “Pushing a .NET Docker Image to Amazon ECR”, I covered the steps which are necessary to create a basic Docker image for a .NET Worker Service. I then built and pushed a Docker image which can run the worker service into Amazon ECR. I decided to attempt to run that image using the Docker ECS CLI.

The first step is to produce a docker-compose file with the service(s) to be run. Here’s the elementary file which I created.

version: '3.8'
services:
  worker-service:
    image: 123456789012.dkr.ecr.eu-west-2.amazonaws.com/ecr-demo:latest

The docker-compose file uses a YAML format to specify one or more services that you wish to start. These can build and run local Docker images, and also (as in this case) reference existing images from a repository.

My docker-compose file defines a single service named “worker-service” and references an existing image from my private ECR repository. This image will be used to start an instance of the service as a container. Under regular use (local Docker context), this file could be used locally with the docker-compose up -d command to start an instance of this worker service as a local Docker container.

docker-compose up -d can be used to start the container, with the -d switch running it in detached mode which avoids the stdout from the container being piped into the console.

docker-compose down can be used to stop the instance when we’re done with it.

More commonly, docker-compose is used to define and run multiple containers which are required to run together. The Docker ECS integration supports this too, but for a simple example, this single service will do just fine.

Switching the Docker Context

Having run the compose file locally during development, we might want to run the service(s) directly in AWS. AWS offers two main managed container services, ECS (Elastic Container Service) and EKS (Elastic Kubernetes Service). Currently, the integration is focused on ECS. The standard steps to run a container in ECS would require some setup to create an ECS cluster, define a service and task definition and perhaps even other prerequisites such as setting up a VPC. The new Docker ECS integration takes care of all of this for us.

First, we must switch our Docker context to our “aws” context, so that commands use AWS as the deployment target. We can switch context with a simple Docker command.

docker context use aws

Docker ECS Compose Up

The next step is to trigger the creation of the required services to run the service in ECS. Today, the command is a plugin, so it’s not a direct match for the local “docker-compose” command. In the future, once ready for release, it sounds like this is planned to work using docker-compose directly.

The command we can use with the beta is:

docker ecs compose up

This command needs to be run in the directory that contains a valid docker-compose file, or a file flag (--file) needs to be used to specify the path to the compose file.

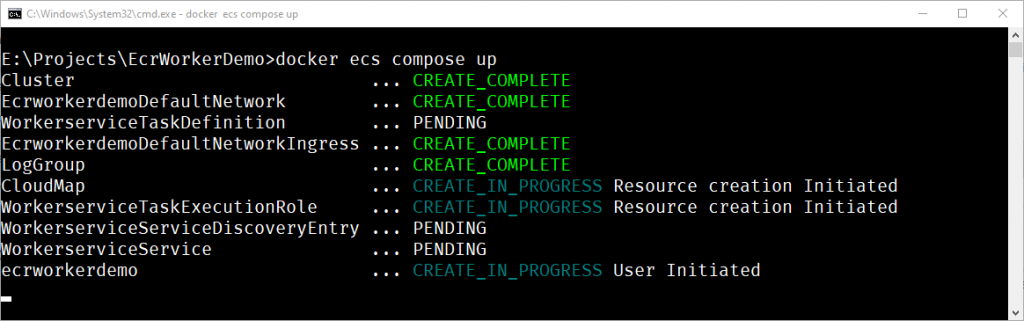

After a few moments, the console provides an output showing the status of the deployment. I’ll explain the output a little later in this post. I ran into two main issues with my first attempt, which I want to talk about before proceeding.

Firstly, when I viewed the Task Definition being created in ECS, it was prefixing the docker.io URL to my image name:

docker.io/123456789012.dkr.ecr.eu-west-2.amazonaws.com/ecr-demo:latest

Despite the documentation including a mention of ECR being supported, this didn’t seem to be working for me.
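To show why that prefix looked wrong to me, here is a small sketch of the rule Docker normally applies when deciding which registry an image reference points at, as I understand it. The first path component is treated as a registry host only if it contains a dot or a colon, or is localhost. Otherwise the image is assumed to live on Docker Hub. The registry_of function is a hypothetical helper of mine, not the plugin's code.

```shell
#!/bin/sh
# Hypothetical helper: print the registry host an image reference resolves to,
# following the usual Docker convention (an assumption, not the plugin's code).
registry_of() {
  ref="$1"
  case "$ref" in
    */*) first="${ref%%/*}" ;;          # text before the first slash
    *)   echo "docker.io"; return ;;    # no slash: a Docker Hub image
  esac
  case "$first" in
    *.*|*:*|localhost) echo "$first" ;; # looks like a host name
    *)                 echo "docker.io" ;;
  esac
}

registry_of redis
# prints: docker.io
registry_of 123456789012.dkr.ecr.eu-west-2.amazonaws.com/ecr-demo:latest
# prints: 123456789012.dkr.ecr.eu-west-2.amazonaws.com
```

By this rule, my ECR image already names its own registry, so it should not gain a docker.io prefix.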

A second issue I noticed was that despite my service being a basic worker, with no network ports exposed, the tooling was attempting to create a load balancer for the service. This is redundant for a worker service.

I turned to the Docker ECS Plugin repository on GitHub to learn more. Initially, I raised an issue for the problem I was having with using an ECR image. However, after digging through the code and commits, I noticed that in fact, some changes had already been made.

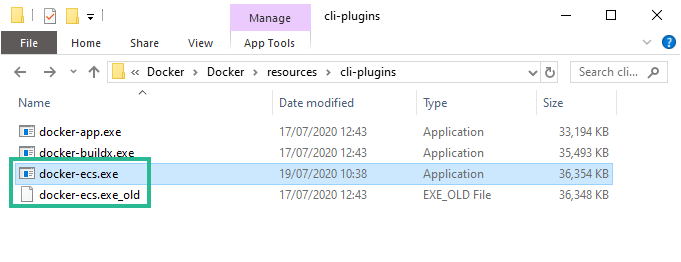

After checking out the releases for the repository, I spotted a newer 1.0.0-beta.2 version had been released 2 days earlier. Having run the version command earlier, I was aware that my current version was beta.1.

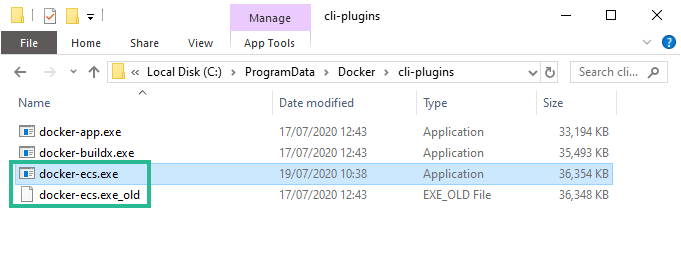

I downloaded the new plugin and spent a few minutes figuring out how to “install” it as the default version. In the end, I found two instances of the beta 1 executable, which I replaced on my file system.

C:\Program Files\Docker\Docker\resources\cli-plugins

C:\ProgramData\Docker\cli-plugins

After replacing these executables, I re-ran the docker ecs version command to check that my change had taken effect.

C:\>docker ecs version

Docker ECS plugin v1.0.0-beta.2 (6629d8e)

Eager to continue, I tried running the docker ecs compose up command again.

I was hit with a new problem…

this tool requires the "new ARN resource ID format"

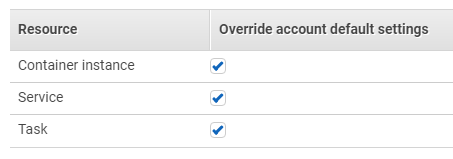

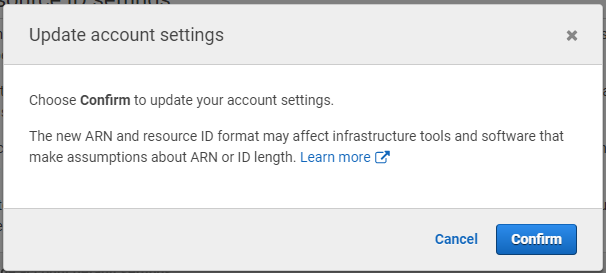

Fortunately, I was vaguely aware of what this error meant as I had previously read about this. Under the ECS console, it is possible to configure the account settings. Here, we can choose to allow the use of the new format for ARN resource IDs.

This is safe in my case as I’m testing this all under a special demo account I maintain in AWS. As the console will advise, more care needs to be taken for accounts with existing resources.
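I made the change in the console, but the same account settings can also be changed with the AWS CLI. The sketch below is an alternative I did not use for this post. It assumes the AWS CLI is installed and that the "demo" profile and eu-west-2 region from my setup apply. It only prints the commands by default. Set DRY_RUN=0 to actually run them, and only do that on an account where the change is safe.

```shell
#!/bin/sh
# Sketch: opt an account in to the new (long) ARN and resource ID formats.
# Dry-run by default so nothing is changed unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"   # print the command instead of executing it
  else
    "$@"
  fi
}

enable_long_arns() {
  # The three ECS account settings that control the new format.
  for setting in serviceLongArnFormat taskLongArnFormat containerInstanceLongArnFormat; do
    run aws ecs put-account-setting-default \
      --name "$setting" --value enabled \
      --profile demo --region eu-west-2
  done
}

enable_long_arns
```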

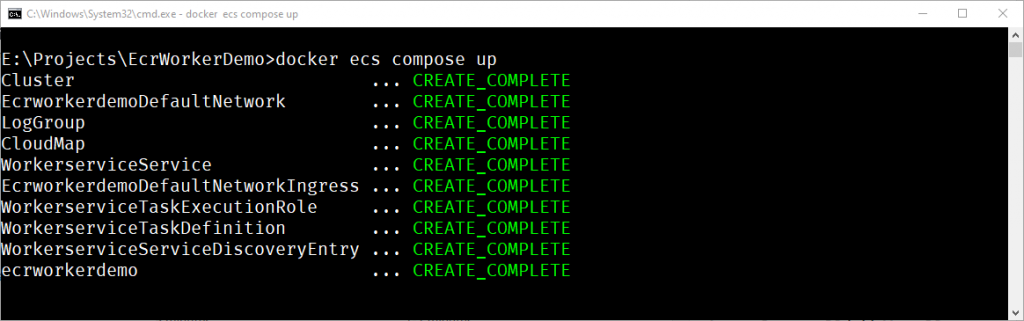

After updating this setting, I once again tried running the docker ecs compose up command. This time, things were looking better and the command began executing.

Under the hood, the Docker ECS plugin creates a CloudFormation file defining all of the services necessary to run an ECS cluster. For each service defined in the docker-compose file, it will register a task definition and run an instance of the service in the ECS Cluster, using AWS Fargate. It does this by parsing the docker-compose file to determine which service(s) it needs to create.

This CloudFormation template is used to create a stack in AWS, which creates and configures the necessary services.

If you’d like to view the CloudFormation file which is generated, you can use the docker ecs compose convert command. This will cause the CloudFormation file to be written out to your console.

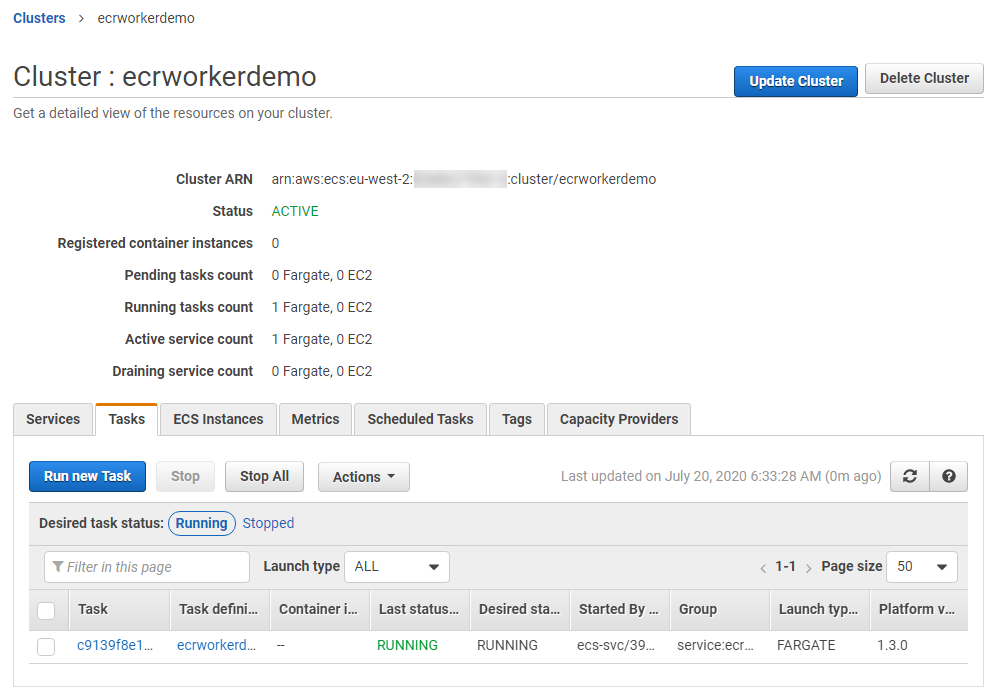

After the creation completes, it’s possible to view the cluster in AWS ECS. In the image below, we can see that the cluster is using the Fargate compute engine.

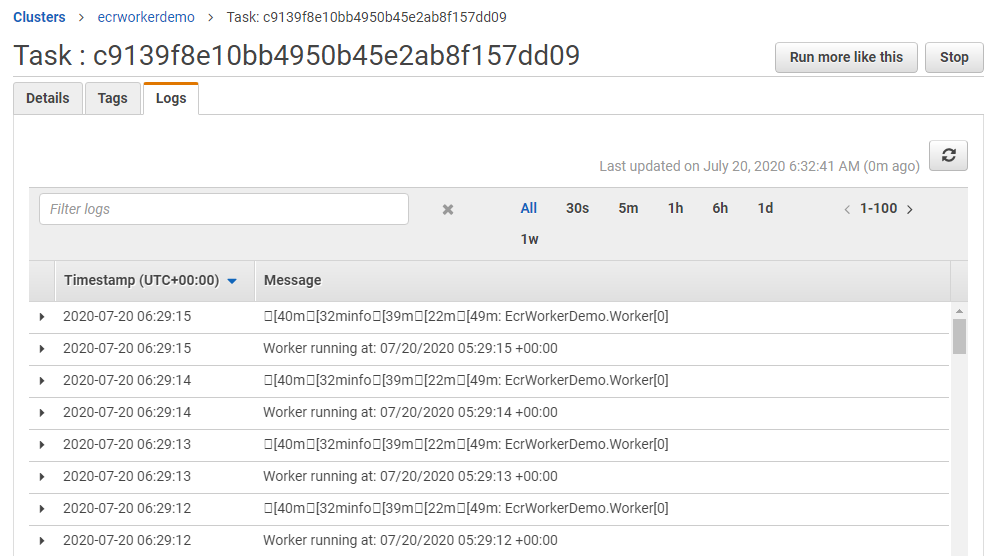

My docker-compose file specifies a single worker service image and, as a result, a single task is started inside the ECS cluster. My worker service is built from the default worker service template which does nothing but log to the console periodically. It is possible to confirm that it is working by checking the logs for the running task.

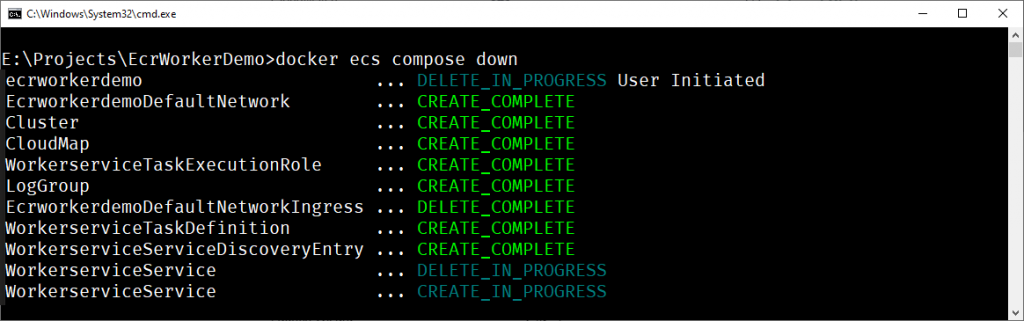

One of the really nice things about this new plugin is that we can easily clear down the resources once we’re done. This helps avoid the cost of running instances of the tasks when we no longer need them. A developer may choose to start up some development resources at the beginning of their day and shut them down once their day is over. This is very similar to the local Docker context pattern, but now running inside AWS.
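That start-of-day and end-of-day routine could be wrapped in a small script along these lines. This is a sketch under a few assumptions. The dev_up and dev_down function names are mine, the "aws" context is the one I created during setup, and docker ecs compose down is the beta counterpart to compose up as I understand it, so check the plugin help for your version. It prints the commands by default and only runs them when DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: bring the compose services up in ECS and clear them down again.
# Dry-run by default so it can be tried without Docker or AWS access.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"   # print the command instead of executing it
  else
    "$@"
  fi
}

dev_up() {
  run docker context use aws       # context created by `docker ecs setup`
  run docker ecs compose up        # deploy the services via CloudFormation
}

dev_down() {
  run docker ecs compose down      # assumed counterpart to `compose up`
  run docker context use default   # back to the local Docker engine
}

dev_up
dev_down
```

Switching back to the default context at the end means later docker commands run against the local machine again.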

Summary

It’s still early days for this integration, which is under active development by the teams at AWS and Docker. While I ran into a few teething problems with my initial attempts, it’s pretty slick once things are working. One area I plan to explore more is how this could fit into a nice integration testing scenario, where I want to spin up some services under test without a great deal of ceremony. For such scenarios, it would be nice if we could perhaps specify additional CloudFormation services that should be created when running the command.

I’ll be watching this integration as it progresses and perhaps try out some more real-world scenarios with it again soon. If you’re already familiar with using docker-compose files locally, there will not be a huge leap to use the same YAML file to quickly deploy instances of services directly into AWS ECS.
